Transcript of my talk at the EDI Day of ACM SIGKDD 2024 in Barcelona, August 2024.

The title of my talk is "A Late Non-Binary Transition." It's a personal story and an unusual story for this type of conference, but this is what I was invited to do, and this is what I'm going to do.

This is a personal story of my relationship with gender. Even though every non-binary person's story is different, and this is mine, I would assure you that I will tell you everything you need to know about being non-binary.

I want to issue a couple of trigger warnings. There is a mention of sexual abuse, without a description, and it didn't happen to me or to anyone close to me. There are also homophobic slurs.

I want to ask you, please do not take photos until the end of the talk, and please remind others if you see them taking photos. Out of context, pieces of this story may not make sense or may not be interpreted as I intend.

* * *

I was born in 1977 in Santiago de Chile. Chile is not an island, but it does feel like an island. It is surrounded to the north by the driest desert in the world, to the east by the tall mountains of the Andes, to the west, by the Pacific Ocean, and to the south, there is Antarctica. It's really a place where nobody goes by accident, and it's not on the way to anywhere else. So, it is pretty insular, and it is also a Catholic country, and I was born during a dictatorship.

I was born in 1977 in Santiago de Chile. Chile is not an island, but it does feel like an island. It is surrounded to the north by the driest desert in the world, to the east by the tall mountains of the Andes, to the west, by the Pacific Ocean, and to the south, there is Antarctica. It's really a place where nobody goes by accident, and it's not on the way to anywhere else. So, it is pretty insular, and it is also a Catholic country, and I was born during a dictatorship.

My parents were the first generation in their respective families to graduate from university, one as an engineer and one as a medical doctor.

I was born with brown skin: my mother comes from the north of Chile, from the area of the desert, and she is also dark skinned, so I was always "el negro", the black one.

I always thought I was particularly ugly, but now I realize I was just a brown kid in a racist society.

I was raised in the countryside, two hours south from Santiago de Chile, in a small town that is famous locally for the quality of its tomatoes, and very little else.

* * *

Children develop theories about gender, as much as they develop theories about everything else. In my case, I would see that men around me ate more, drank more alcohol, smoked more, cursed more, and were prone to hit walls or other people.

As many theories, you can get some elements wrong. Until I was a teenager, I believed that digital watches were for men and analog watches were for women because, accidentally, this is what I saw around me. For some reason, all the men in my family wore digital watches, and all the women analog watches. At some point, I discovered that my theory was wrong.

What I want to say is that many of these things about gender are not thought to us, they are simply something we observe in our environment, and those observations lead to theories that are sometimes wrong, as all theories.

* * *

I also learned homophobia as a child, when I was a very small kid, maybe 9 or 10 years old, and I learned it in at least three ways.

The first one was while I was watching TV with my parents on their bed on a Sunday morning. It was a movie about an American submarine being attacked by Japanese destroyers. During one of the attacks, a sailor panics and another sailor grabs him and kisses him on the lips. I was really shocked because I had never seen two men kiss. I was not horrified, but this was against my theories on gender, so I asked my father: Why did he kiss the other man?

My father told me: He kissed him because he was acting gay. It was his way of showing him that he was not acting like a man, that he was not being brave. That was my first brush with homophobia.

The second one was that I learned that the slur for gay man ("maricón") also means someone who betrays you, and the associated noun ("mariconada") means a betrayal or deception. Or that's what they used to mean in the '80s when I was growing up.

The third one was more serious. My parents had been in the communist party, and they were escaping persecution by keeping a low profile in a small town. Some of their friends would come visit sometimes, and I overheard a story about one of their friends, who was detained by military men from the regime. Once in jail, they told him: you are gay, and he said, I am not gay, and then they insisted he was gay, and raped him repeatedly. I was shocked, and what I learned from this was that being gay is a very dangerous thing to be accused of, even if you're not.

* * *

As I was growing up, I was a pampered know-it-all kid. My parents really were very protective of me, I had very good grades, and they had very high hopes for me. I was soft-mannered, as I have always been, and I was bullied relentlessly, as many of you perhaps were.

I hated gym class, but now that I'm a grown up, I realized that it wasn't because I didn't like physical exercise. I just didn't like being segregated by gender. The reason was that I didn't like to spend time with boys. It was tense, it was difficult, it was annoying, and it was dangerous. Countryside boys were strong, they knew how to work the land, how to work with tools, and they knew how to fight with their fists. Time spent with them was time spent being small and avoiding fist fights because I would lose.

I preferred to spend time playing with the girls, not only because they wouldn't fight so much, as there was always some level of aggression, but it was low-key. I enjoyed quieter and softer activities, and I enjoyed girls' games, making things with plant fibers or flowers, or singing, or playing simple things with cards. I really enjoyed those games more than the boys' games. I enjoyed spending time with girls.

But I was not a girl, and that was pretty confusing.

* * *

My childhood and teen years were the '80s and early '90s, and it was the peak of the HIV epidemic. HIV was associated with gays, and an AIDS diagnosis was a death sentence. In the '80s, the time from a diagnosis of AIDS to death was on average three years.

I was still soft-spoken and mild-mannered, and I was read as gay. That attracted more bullying. I don't think I was gay. I was just bad at being a man, bad at being manly.

At that point in my life, I only had two options. One of them was to try to mask and be a little bit more manly. I knew how to do it because I would just have to imitate children from the countryside. The other option was to come out as gay. Coming out as gay is always very difficult, but it's particularly difficult if you're not gay.

I also saw that option, of coming out as gay ... it was like crossing to the other side of a road, where there will be no women, and there will be much more bullying. It didn't make sense as a choice to me.

I was trapped in this binary between two options that were impossible for me to hold, somehow stuck between the two.

* * *

I studied in an engineering school where 85% of the students were men. Thirty years later, that figure is closer to 70%. There was rampant sexism and homophobia, and I have to say I was part of the sexism and the homophobia as any other male in the school.

I got my PhD and married at age 27 and emigrated shortly after.

* * *

Then, I did not think about gender for the next 15 years.

I spent those years working my ass off, cranking out papers like churros. Some of them were good, which happens if you produce enough. My hands would hurt constantly.

And I had my wife. My wife is a PhD in bioethics, an animal rights activist, and a published author. She supported me throughout all this process. She provided everything. She provided friendship, social connections; she was my emotional support, my travel agent, everything. And I cultivated very few friendships or activities on my own. I was just very focused on my work and little else.

And I didn't think about gender. The way in which I didn't think about gender resonates with a story attributed to David Foster Wallace:

There are these two young fish swimming along, and they happen to meet an older fish swimming the other way, who nods at them and says, “Morning, boys. How’s the water?” And the two young fish swim on for a bit, and then eventually one of them looks over at the other and goes, “What the hell is water?”

Gender is this thing that we are surrounded with. If it benefits you, if it allows you to swim, then you don't see it. You see it when it starts to be turbulent or pushes against you.

* * *

Gender as a social construct, what conservatives call gender ideology, is this idea that gender is a separated category from sex.

One of the best explanations that I have seen about gender as a social construct is a metaphor, a hypothetical description of a different planet. On that planet, about half the population are born men and about half are born women, also about half are "small" (say, shorter than 1.60 m) and about half are "big" (say, taller than 1.80 m), with very few people in the middle.

Men and women dress similarly and lead very similar lives along most axes.

But: most doctors are small and most nurses are big, most engineers are small and most teachers are big, most presidents and CEOs are small, and there is a sizable pay gap in favor of those who are small, which most of those who are small attribute to physiological differences that come with height.

This is the best explanation I have seen about how things that we often associate with gender, like wearing or not wearing a skirt, or wearing or not wearing makeup, preferring one toy over another, one color over another, or one profession over another, etcetera, many of those things are arbitrary. However, if you benefit from the fact that there is a difference, and you try hard, you can definitely connect it to a biological difference. Otherwise, most of them are truly arbitrary.

* * *

I came back to Catalonia in 2016 and there were three things that rocked my conceptions about gender.

I came back to Catalonia in 2016 and there were three things that rocked my conceptions about gender.

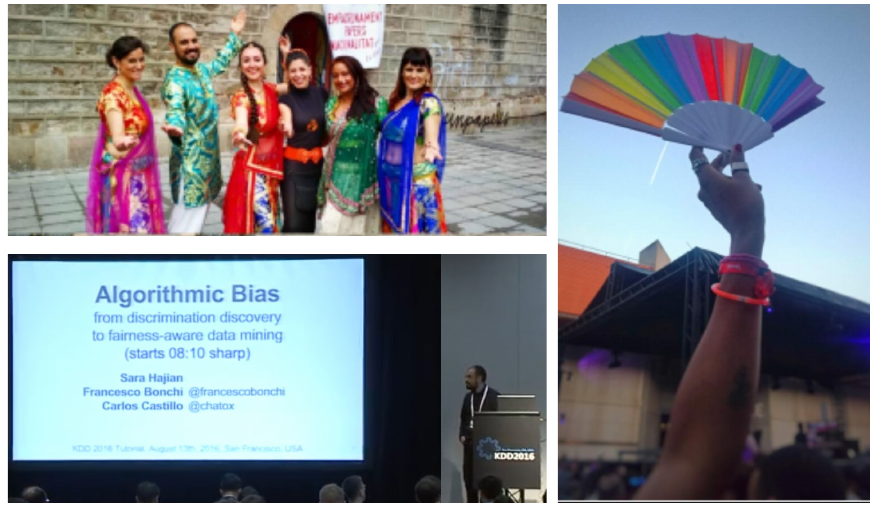

One is that I started dancing Bollywood dances. I found Bollywood music, the music with Indian roots that is played in Bollywood films, fun and uplifting. It lifted my spirit. So I entered an academy and I learned Bollywood dances, and I was the only man in the academy. There were 75 people.

The second thing was that together with Francesco Bonchi and with Sarah Haijan we gave a KDD tutorial in 2016 in San Francisco about algorithmic fairness. It was one of the earliest tutorials of that kind.

The third thing was that I had more LGBTQ friends. Once, I was talking to one of them, she's a moral philosopher, and I told her, look, I'm learning about feminism, but I don't want to do another PhD. Please give me short stuff to read that I can learn easily, and I can become a feminist the easy way. And she told me: Yes, that is exactly what male privilege is. Sexism doesn't harm you, and that's why you don't want to invest too much time learning about it.

I didn't do a PhD in feminism. I don't intend to do it, but I read a lot about gender and I believe this is time well spent.

* * *

And feminism, and thinking about gender, led to other things, as many things lead to other things. In my case, this led to non-monogamy and to a bisexual awakening.

Which led to friends.

For the longest time, I was very scared of having a close connection with any women who was not my wife. In my culture, in the way I was raised in Chile in the 1980s, this was seen as something that a married man should not do. Therefore, I didn't do it. And the problem was that I don't have many male friends and I still don't have them. Maybe I don't want them. I wanted to be with the girls, as usual. So, non-monogamy allowed me to have friends finally, because the friends I wanted were women or at least were not men, and now I could give myself permission to spend quality time with them.

* * *

You know when right-wing politicians say that all these things about gender are a slippery slope?

They are right.

Queerness is an attractor in the space of possibilities, and that's what I have experienced.

There is this thing called gender dysphoria, which is when you feel that your gender presentation or the way the world treats you does not match your gender. But you can also experience gender euphoria, and I have been experiencing gender euphoria over the past few years. It has been wonderful.

* * *

Since my 20s I have liked techno, particularly industrial techno. In my 20s I also liked gothic rock and Brit pop. What these genres have in common is that you can wear eyeliner to the concerts and to the parties. And this is what I would do. I also started wearing eye shadow to parties and at some point, a gay friend told me I look queer and I loved it. I loved the way he said it. I liked the way I looked.

I would also paint my nails black sometimes, and in my late 30s, I met a friend who did a little nail stamping for me. I looked at it and found it wonderful. I have never been good at any sort of artistic self-expression. I tried drawing, painting, making music; I have tried a lot of things and it is all bland. Everything I produce is bland and I don't like it.

I would also paint my nails black sometimes, and in my late 30s, I met a friend who did a little nail stamping for me. I looked at it and found it wonderful. I have never been good at any sort of artistic self-expression. I tried drawing, painting, making music; I have tried a lot of things and it is all bland. Everything I produce is bland and I don't like it.

But manicure and nail stamping were things that really resonated with me, and that I really enjoyed doing. To this day, I feel like I'm competent at doing a reasonable manicure, and at doing nail art with traditional nail polish.

Something that happened to me often was that I would wear nail polish over the weekend and then on Sunday night, I would be like up and down my home thinking, okay, should I take this off or can I leave this on and wear nail polish to work? And one day I decided, okay, I'm gonna do it, but on Monday morning before leaving home, I removed the nail polish because I got scared.

And then I went to a meeting in Montreal, and I wore nail polish to that meeting and I asked some friends if that was okay, and I got an answer from a researcher who told me: nobody cares. Nobody cares, that was a wonderful answer.

* * *

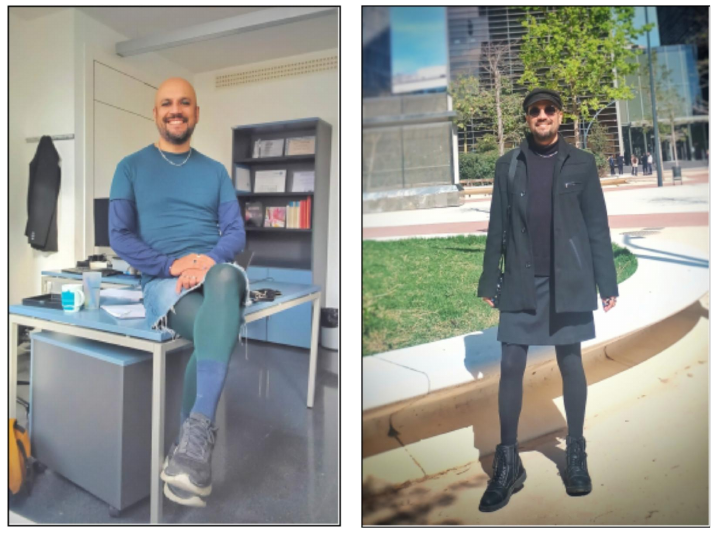

Festivals are a space of experimentation. Think of Burning Man, you see a lot of people wearing skirts. I started doing this at other festivals. In one of the first festivals I attended wearing a skirt, a photographer told me, oh, I'm looking for interesting people in the festival, and she snapped a photo of me and published it in Timeout magazine.

Festivals are a space of experimentation. Think of Burning Man, you see a lot of people wearing skirts. I started doing this at other festivals. In one of the first festivals I attended wearing a skirt, a photographer told me, oh, I'm looking for interesting people in the festival, and she snapped a photo of me and published it in Timeout magazine.

Not wearing a skirt before is something I regret. I don't regret many other things; my life has been nice and productive. But not wearing a skirt before, oddly, is something that I do regret because it makes me happy, and it's kind of playful, and it creates a certain way of behaving, and it induces in others certain expectations that are much more aligned with what I am.

And that's what I very rarely wear trousers now.

* * *

This was not planned. I usually do things, like many of you, according to a plan. But this was not planned at all. Really, everything I did was to listen carefully to how I felt, what I wanted, and to slowly ease myself into the things I wanted to do. It was not planned and I didn't have an objective. I still don't have it.

At some point, I wondered, do I want to transition and be a woman? And I thought, I don't know. Maybe not now. But masculinity felt to me like a burden. I didn't want to carry it anymore, and the less I carry it, the happier I am.

To me, masculinity is just an aesthetic. All the values that you can associate with masculinity, like courage, physical strength, resilience, all of those are great for everybody, not only for men. And all the values that are not good for everybody, like aggression or competitiveness, are the reason why we cannot have nice things. So that's how I feel about masculinity today.

* * *

I waited until I got my tenure two years ago to really be more queer openly and express differently in public and at work. I don't think it was necessary. I think my colleagues will not have objected by their reaction now. But at that time, I didn't know, and I had spent a big chunk of my life working on this and I did not want to ruin it by being queer.

I waited until I got my tenure two years ago to really be more queer openly and express differently in public and at work. I don't think it was necessary. I think my colleagues will not have objected by their reaction now. But at that time, I didn't know, and I had spent a big chunk of my life working on this and I did not want to ruin it by being queer.

I love the expectations of behavior that this creates around me. They are much more aligned to who I am.

My colleagues have said nothing. Neither my colleagues nor my students; nobody has said anything, anything. I sometimes wonder if I can wear a clown nose and come to work and see what happens, because I have got like zero reaction from my colleagues, which is weird.

To me, this speaks of some discomfort. If people felt really comfortable, they will say something, but there is a level of discomfort, but not enough. So I'm in this valley of discomfort where if it were worse, they will say something, and if it were easy for them, they will also say something. But given that this is slightly uncomfortable, they don't say anything, which is okay.

This is not a call for you to comment on my appearance.

* * *

I had many doubts along the way. I would leave home, and I would look at myself in the mirror of the elevator and think, is this a person that has a mental health problem? This person I'm looking at. Is this a person with a mental health problem?

I very rarely think that now, but there was a time where this was a recurring thought.

* * *

In this path, the less masculine I am, the happier, freer I feel. I feel connected with attitudes, with values that I was running away from because they will attract mockery and violence, especially in my formative years. But now I'm connected with those things.

In this path, the less masculine I am, the happier, freer I feel. I feel connected with attitudes, with values that I was running away from because they will attract mockery and violence, especially in my formative years. But now I'm connected with those things.

And I don't want to change a set of expectations by another. I don't want to become a woman and I don't want to go back to being a man. I'm really okay with the way I am now because it generates the right expectations, and it feels good for me.

* * *

I look at myself in the mirror and I feel pretty. That is something I never experienced before. I like the way I look.

Gay guys and straight girls, they find me too feminine, but among my people, among queer people, I'm fire. I have received more attention than ever, I have to manage an abundance of attention.

If I go to a party or a festival, I am frequently stopped by people complimenting me, and among those compliments, there is one that happens often. Someone would look at me up and down and say: "I like … I like … everything!" And I love that expression, and I expect it because it happens often.

* * *

There is this band called The XX, based in London, and their lead singer is queer, her name is Romy. This summer at Primavera Sound, I listened to Romy singing "Enjoy Your Life", a song from her first solo album.

And the chorus goes: "my mother says to me, 'enjoy your life'."

My mother never told me to enjoy my life. My mother told me what your mothers probably told you, which is to study and be productive.

I was in tears.

* * *

So, I will conclude by saying this: being non-binary allows me to enjoy my life.

And that is everything you need to know about being non-binary.

Barcelona, August 2024

P.S. If you want to be an ally to gender non-conforming people:

- Learn about gender as a social construct, specially if you have benefited from it.

- Stop whining about having to learn new words — we constantly do it to develop new concepts in science.

- Understanding can wait, but kindness, when delayed, is unkind.

- Ask for pronouns and honor them, and if you make a mistake, apologize very briefly and move on.

- Do not ask questions about their bodies to people who are not your close friends.